CST Blog

Hate Fuel: the hidden online world fuelling far right terror

11 June 2020

This report highlights four social media platforms where thousands of violent antisemitic videos, memes and posts are regularly shared by far right extremists who have migrated away from mainstream sites like Facebook and YouTube. Each of these four platforms – Gab, Telegram, BitChute and 4chan – was either set up or operates as a direct challenge to the main social media companies, citing free speech or privacy as justifications for their role in the promotion or distribution of hateful, inciteful and often violent content. They provide relatively unregulated online spaces for extremists to share content that celebrates and encourages hatred and murder.

The content revealed in this report is so extreme, both in terms of the violent imagery we found and the quantity of explicit antisemitism, that it would be irresponsible for CST to publish the report in full. Instead, we are sharing it privately with Police, government and other counter-extremism officials and experts, and we are publishing a sample of the content of the report in this post.

The report shows:

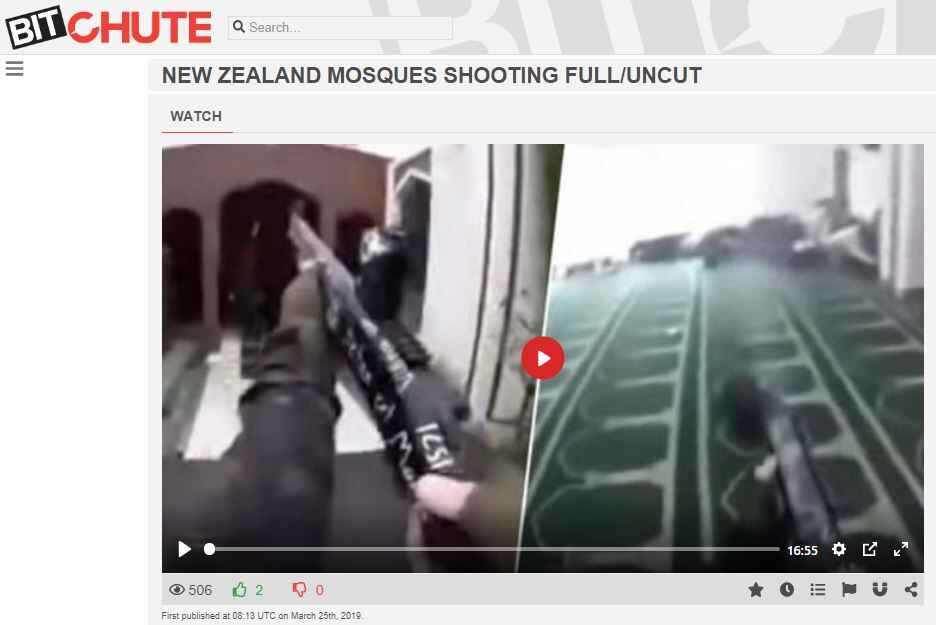

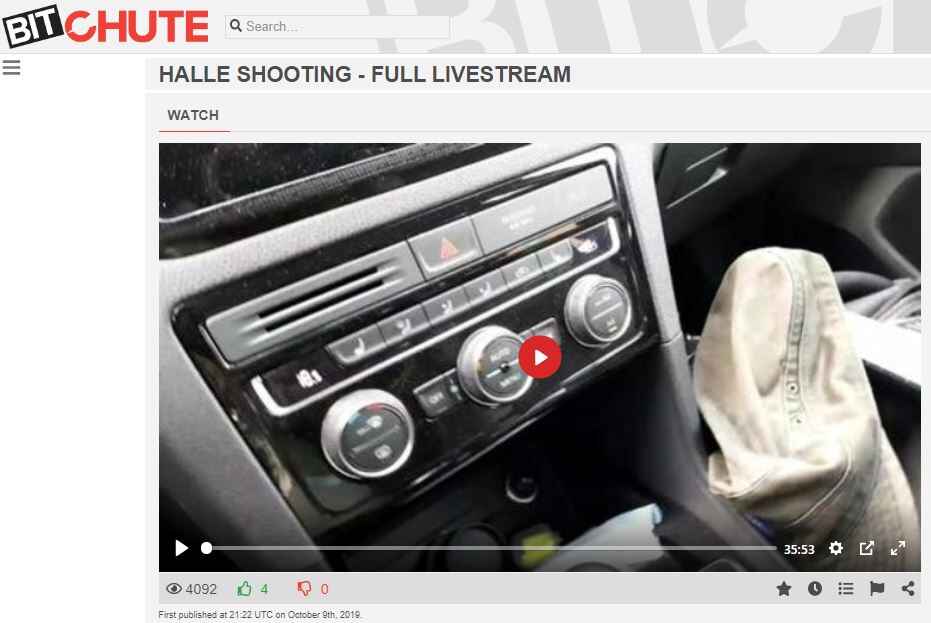

- BitChute, a UK-registered company with British directors, hosts several videos of actual far right terror attacks; propaganda videos from the proscribed terror group National Action; and thousands of hateful antisemitic videos that have been collectively viewed over three million times

- Gab is host to a dedicated network of British users called “Britfam” that has 4,000 members and approximately 1,000 posts per day, which far right extremists use to circulate racism, antisemitism and Holocaust denial

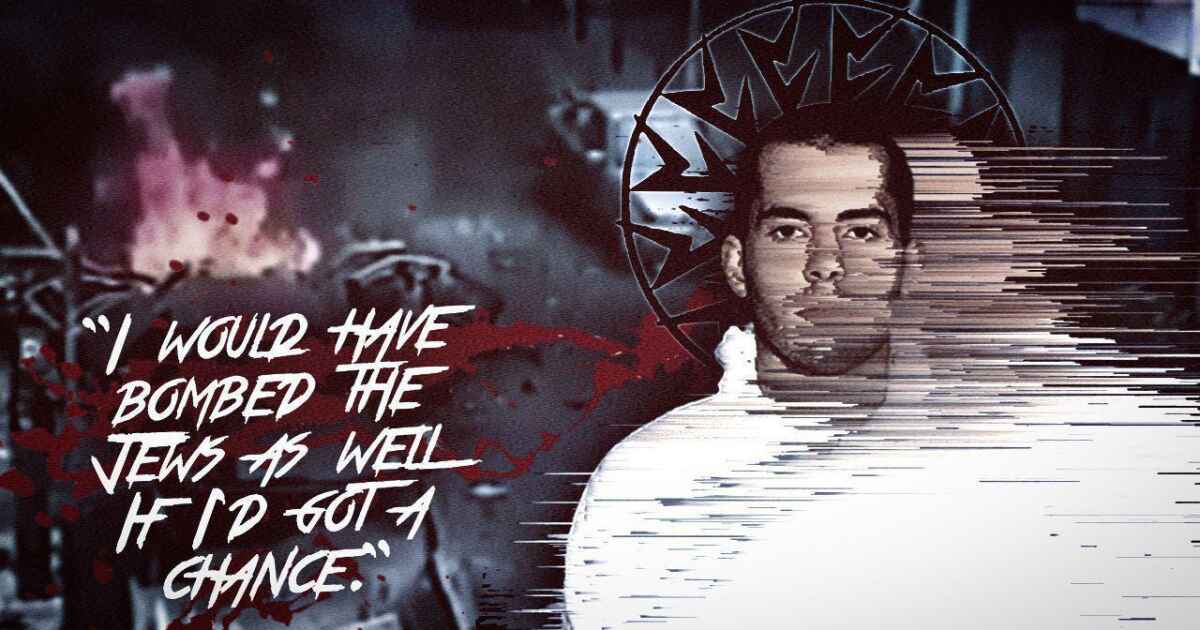

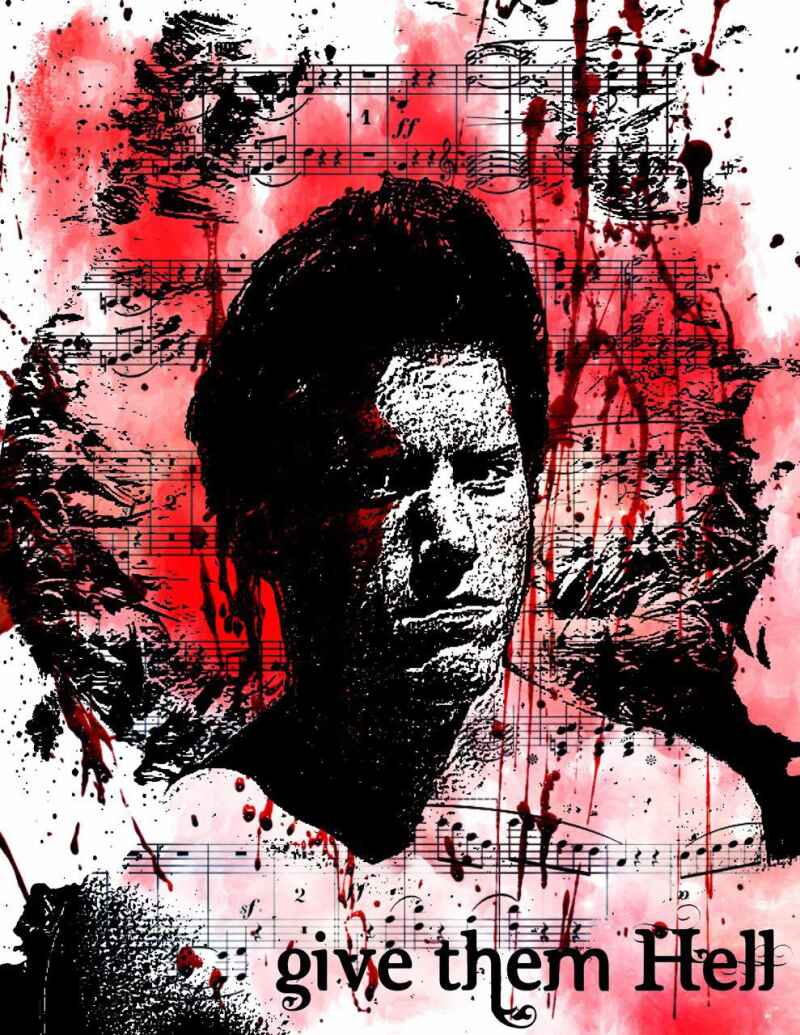

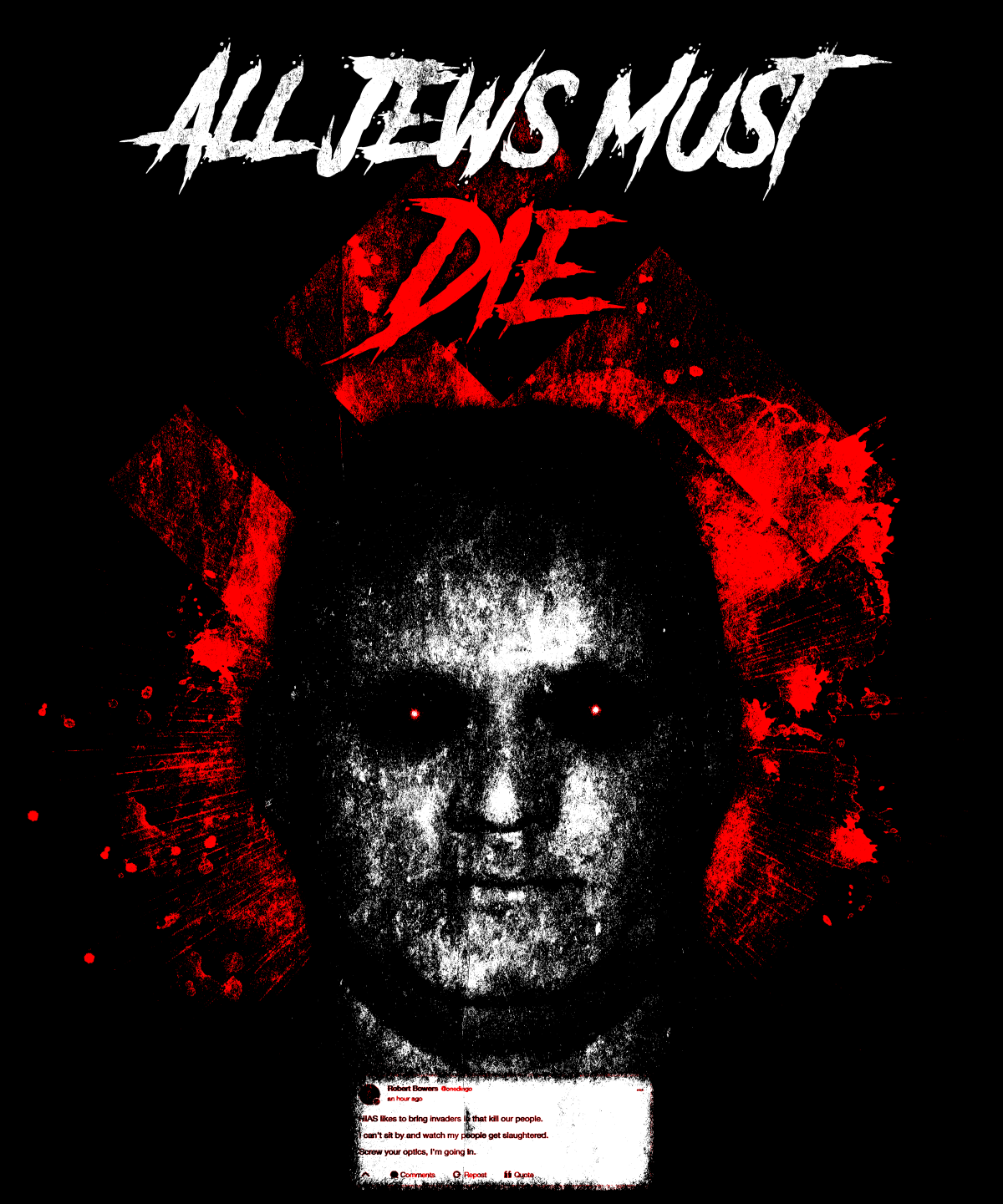

- Telegram hosts several images and posts celebrating far right terrorists, including British terrorists Thomas Mair and David Copeland, alongside other images calling on its users to kill Jews

- 4chan’s /pol/ discussion board hosted at least 26 different threads that contained explicit calls for Jews to be killed and had the words “kill” and “Jews” in the title, all created in the twelve months following the October 2018 Pittsburgh synagogue attack (this total does not include threads titled in different ways, e.g. “Kill Kikes” or “Kill Jewish people”)

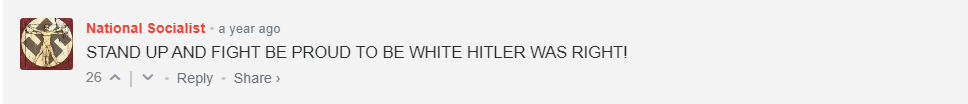

- Comments on BitChute are hosted through Disqus, a widely used mainstream third-party comment-hosting service. CST found hundreds of violent, antisemitic comments on some of the most popular hate fuel videos, all facilitated via Disqus; this is likely to be a fraction of the total number of hateful comments across BitChute as a whole

Shockingly, all the material in this report is easily accessible to anybody who wishes to find it: none of it is hidden behind password-protected firewalls or in secret forums. CST has produced this report because we fear the quantity and spread of this incitement pose an urgent and ongoing terror threat to Jewish communities. It shows the challenge of policing hateful extremism in the UK; the need for international cooperation in tackling right wing extremism, similar to that deployed against jihadist propaganda; the responsibility of platforms (and their directors) for illegal content they host; and the urgent need for regulation of all online platforms, whatever their size.

The glorification of hatred and violence, and the veneration of martyrdom, have been defining features of fascism and Nazism since their earliest days. Nearly one hundred years later, neo-Nazis around the world are repeating this with murderous consequences, in a shocking example of the synthesis between global online networks, hateful individuals and actual terrorist attacks.

The “hate fuel” revealed in this report consists of online memes, videos and slogans that celebrate previous terrorist attacks on synagogues, mosques and churches, and encourage others to follow suit. This is a global movement that has already sparked terrorist attacks on synagogues, mosques and minority communities in Europe, North America and New Zealand. The perpetrators of those murderous attacks are held up as heroes by right wing extremists around the world, who celebrate their deeds and encourage each other to emulate them.

All four platforms examined in this report host copies of the video that Brenton Tarrant livestreamed online as he murdered 51 worshippers at two mosques in Christchurch in March 2019. Some copies of this video have remained on these platforms for over a year and have been viewed thousands of times. Other content praises Robert Bowers, who murdered 11 people at the Tree of Life synagogue in Pittsburgh in 2018, and John Earnest, who murdered one worshipper at the Chabad of Poway Synagogue in San Diego county in 2019.

Even though far right terrorist attacks are perpetrated by lone actors on different continents, the attackers view themselves as part of a single global movement, with its own online language and subculture that is developed and sustained on these platforms. Specific organisations and national identities are less relevant in what has become a genuinely organic, grassroots global subculture.

The filming and livestreaming of terrorist attacks has become an integral part of far right attack planning as it provides new fuel for the hatred that drives this movement. The perpetrators of successful attacks are celebrated and glorified via striking imagery and memes; their videos are designed to mimic popular computer games, particularly first-person shooter games, in which actual deaths are welcomed as ‘high scores’.

Much of this online hate fuel promotes dangerous conspiracy theories about Jews and other minorities: the same conspiracy theories that have inspired deadly attacks around the world in the past two years, including those targeting synagogues in the United States and in Germany. Central to this is the ‘Great Replacement’ conspiracy theory, which alleges that Jewish organisations and synagogues are using mass immigration to undermine white Western nations. One widely circulated infographic lists over 20 UK Jewish organisations that it claims are orchestrating immigration and the “extermination” of the white race, and urges far right activists to “Wake Up Before It’s Too Late”.

This report is intended to raise awareness of the deadly consequences when such material goes unchallenged: the hateful content contained in this report is only a small fraction of what is generated by this online hate community. The consequences of inaction are clear. Until this hate fuel is challenged and comprehensively removed, it is likely that acts of violence will continue or worsen.

If you would like to receive a copy of Hate Fuel: the hidden online world fuelling far right terror, please contact CST.